In this mini-series I look at building a minimally functional XML parser. Last time I used Haskell’s Parsec library. This time use Perl and the Regexp::Grammars to build an analogous XML parser.

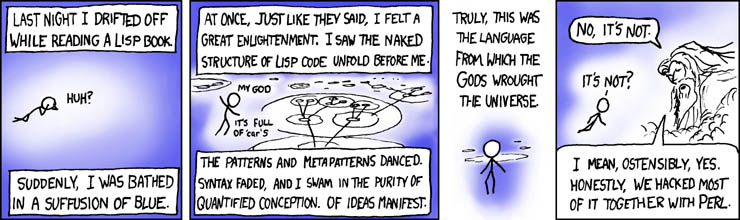

Cartoon from xkcd

Introduction

I recently attended Damian Conway’s understanding regular expressions tutorial and picked up some useful tips. On eof the most useful of these was to check out Damian’s Regexp::Grammars module on the CPAN. It adds some important features to Perl’s regular expression capabilities, enabling you to build a proper recursive descent parser out of regular expressions. Now, that may seem hopelessly scary if you have ever tried to build a regexp to do anything moderately complicated, but some nifty features in the modern Perl regexp engine, complemented by some help from Regexp::Grammars actually made the whole process quite painless.

A quick reminder that this is just a toy XML parser for my own coding amusement and general edification. If you are using Perl to do stuff with XML, you should use one of the many fine XML modules on the CPAN. I find XML::Simple more than adequate for most of my XML needs — which are, indeed, mostly simple. Readers should be aware that I work daily with Perl and am a mere acolyte when it comes to Haskell.

Getting started, defining the problem

As I did in the last installment, I will make a trivial XML parser. The spec is similar to that spec, an xml document is made of elements. As I said of the Haskell/Parsec version that In particular I will treat all character data as Strings, and I won’t care about chardata blocks or doctype declarations. I will, however, deal with xml text declarations (i.e. <?xml version="x.y" encoding="ZZZ-Z" ?>) and self closing tags (i.e. <mytag />)

. There is more detail in part one, so let’s jump straight in.

I started out this time by reading the excellent module documentation for Regexp::Grammars. As you may know, Perl since 5.10 has been capable of recursively matching regular expressions. However, being able then to extract and use that data was not possible until Regexp::Grammars was written. To quote the docs, In other words, using Perl 5.10 you can match complex data, but not parse it into an internally useful form.

You can match complex data, but not parse it into an internally useful form…

In addition, Regexp::Grammars obviates the need to keep explicitly matching whitespace which is a PITA. A 20 minute perusal of the docs, and a bit of typing gave me enough of a grasp of the way that my solution would work to type out a first draft. My first take matched self closing tags and XML text declarations, but struggled with attributes and with "normal" tags (which can be recursive). This was mostly my fault for rushing my coding and not digesting properly the docs. After another little while I had completed my grammar, which is pretty much the whole parser! Here it is.#!/usr/bin/perl

use strict;

use warnings;

use utf8;

use 5.10.0;

use Data::Dumper;

use Regexp::Grammars;

$Data::Dumper::Indent = 1;

my $parser = qr{

<logfile: parser_log > # Log description of the grammar

<nocontext:> # Switch off debugging noise

<Document> # Define a document

<rule: Document> <[Element]>* # Contains many elements

<rule: Element> <XMLDecl> # Which can be XML declarations,

| <SelfClosingTag> # OR self closing tags

| <NormalTag> # OR normal tags

<rule: XMLDecl> \<\?xml <[Attribute]>* \?\> # An xml can have zero or more attributes

<rule: SelfClosingTag> \< <TagName> <[Attribute]>* / \> # A self closing tag similarly

<rule: NormalTag> \< <TagName> <[Attribute]>* \> # A normal tag can also have attributes

<TagBody>? # And a body

<EndTag(:TagName)> # And an end tag named the same

<token: TagName> [^\W\d][^\s\>]+ # A name begins with a non-digit non-non word char

<rule: EndTag> \< / <:TagName> \> # An end tag is a tagname in <>s with a leading /

<rule: TagBody> <[NormalTag]>* # A tag body may contain normal tags

| <[SelfClosingTag]>* # OR self closing tags

| <Text> # OR text

# note that NormalTags are recursive.

<rule: Text> [^<]+ # Text is one or more non < chars

<rule: Attribute> <AttrName> = \" <AttrValue> \" # An attribute is a key="value"

<token: AttrName> [^\W\d][^\s]+ # Attribute names defined similarly to tag names

<token: AttrValue> [^"]+ # Attribute values are series of non " chars

}xms;There is a lot to digest here. I start by importing my normal mosules and RegexpGrammars. I set the Data::Dumper indentation a little narrower than the default, which I prefer. Next, I tell Regexp::Grammar to log what it is doing, of which more shortly, then we switch off the context feature, which adds a copy of the current matching context to each entry in the data structure that is returned by the parser. Now we move on to define an XML Document, I shall let the comments explain the majority of the grammar. It is very similar to the one I developed with Parsec. Writing this felt almost like constructing a data type in Haskell. But the genius thing about working with regexen is that once I was able to describe my grammar, the heavy lifting was done. The rest of the code I would write was needed merely to call my regular expression on a string or the contents of a file.

The logfile feature is very nice and helped me debug some performance probms I hit whilst building my grammar. As you can see, it spits out a complete and extremely helpful description explaining what you have said in your grammar (which may or may not be what you meant!).$ cat parser_log

info | Processing the main regex before any rule definitions

| |

| |...Treating <Document> as:

| | | match the subrule <Document>

| | \ saving the match in $MATCH{'Document'}

| |

| \___End of main regex

|

| Defining a rule: <Document>

| |...Returns: a hash

| |

| |...Treating <[Element]>* as:

| | | match the subrule <Element> any number of times

| | \ appending each match to $MATCH{'Element'}

| |

| |...Treating '# Contains many elements' as:

| | \ a comment (which will be ignored)

| |

| \___End of rule definition

|

| Defining a rule: <Element>

| |...Returns: a hash

| |

| |...Treating <XMLDecl> as:

| | | match the subrule <XMLDecl>

| | \ saving the match in $MATCH{'XMLDecl'}

| |

| |...Treating '|' as:

| | \ normal Perl regex syntax

| |

| |...Treating <SelfClosingTag> as:

| | | match the subrule <SelfClosingTag>

| | \ saving the match in $MATCH{'SelfClosingTag'}

| |

| |...Treating '|' as:

| | \ normal Perl regex syntax

| |

…

Adding some glue

At this point we can apply our parser and get back a data structure called %/ (which is a very Perlish name indeed) which recursively represents our data structure as an array of hashes of hashes of arrays of hashes and so on. That is as useful as it sounds so I spent some time making a search_collection sub that would let me search for a particular key whose value matched a string I specified. Its rather gnarly as it must deal with arrays and hashes recursively. As you can see, getting the data out of the tree was much harder than it was in Haskell, even though getting it into a tree in the first place was, to my mind rather more elegant.sub search_collection {

my($collection,$target_key,$target_val,$results) = @_;

if (ref $collection eq "ARRAY") {

for (@{$collection}) {

$results = search_collection($_,$target_key,$target_val,$results);

}

return $results;

}

for (keys %{$collection}) {

my $value = $collection->{$_};

if (ref($value) eq 'HASH' || ref($value) eq 'ARRAY') {

$results = search_collection($value,$target_key,$target_val,$results);

}

if(uc $_ eq uc $target_key && uc $value eq uc $target_val) {

push @$results, $collection;

}

}

return $results;

}

I look at the collection to see if it is an array. If it is I call my search on each element of the array and return the accumulated results. Otherwise, I have probably got an hash. I go through the keys of the hash, looking at their values (its necessary to look at all the keys rather than just the one I care about, because the values may in themselves be hashes or arrays). If the value is indeed and array or hash, I call the sub recursively on the value and accumulate the results. In addition, if the key and value are case-insensitively equal to the ones I am looking for, I push the whole collection into my accumulated results. Finally I return the results arrayref. Whew!

Now that I can find tags in my parse tree, I add some skeleton wrapper code to my program to deal with opening a source file, applying the grammar and so on. At the top of the file I adddie "Needs path to an xml file" unless (@ARGV==1);

my $filename = $ARGV[0];

open my $in,"<",$filename

or die "Feck: $! on $filename";

my $xml_file = do {local $/; <$in>};

That do block is a Perlish way to slurp a file into a string.

Below my grammar, I add a small block to actually apply the parser to the string I’ve slurped from the file.my $parsed_xml;

if ($xml_file =~ $parser) {

$parsed_xml = \%/;

}

else {

die "Can't parse xml!\n" . @!;

}

#print Dumper $parsed_xml;I have commented out my testing statement, as you can see. @! is an array of failed regexp applications that Regexp::Grammars collects for debugging purposes. %/ is the tree that is built by applying our grammar. And the leading \ makes it into an hashref which can be used with our search_collection sub. I shall add a couple more lines to the program, to do the same task as we did with the Haskell version -- pulling links out of an RSS feed that we have downloaded.

my $link_tags = search_collection($parsed_xml, "TagName", "link");

map { say $_->{TagBody}{Text} } @$link_tags;

We call search collection on our tree, looking for tags named link. We call map on this collection to pull the text out of the body of the tags for this is where the URLs we so devotedly covet are to be found. And we are done. Here is a truncated sample run.$ ./xml_parser.pl register.rss | head -n10

http://www.theregister.co.uk/

http://www.theregister.co.uk/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/13/android_ondevice_malware_scanning/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/13/spacex_satellite_fail/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/13/apple_misappropriates_swiss_photographers_image/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/12/myhrvold_patent_3d_printing/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/12/ftc_nearing_google_antitrust_decision/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/12/endeavor_trundles_through_the_streets_of_los_angeles/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/12/apple_licenses_swiss_clock/

http://go.theregister.com/feed/www.theregister.co.uk/2012/10/12/win8_preorder_pricing_details/

…

Conclusions

My Perl implementation of the XML parser was certainly shorter and arguably a little easier to read, at least the actual parsing part was! Extracting the data was rather more convenient in Haskell, though there were still the wrinkles of having to jump into the IO monad when dealing with files. This may be the right thing, but it doesn’t feel like the easy thing.

I shall certainly be using the new regular expression features like named captures in future…

I was able to construct a reasonable toy XML parser rather easily in both languages and I would feel confident implementing something more substantial were HXT and the various Perl XML modules not to exist. Both Regexp::Grammar and Parsec seem like fantastic tools for constructing domain specific languages (DSLs) — Haskell’s strict typing may give it an edge here. Haskell also encouraged me to structure my code in a more library-like fashion. I felt the Perl version was far more scripty. That isn’t to say that I couldn’t have built a Perl module of course, it just didn’t feel quite so straightforward. I think a major factor at play here is the GHCi REPL (that’s Read Evaluate Print Loop), which makes testing functions a breeze. The other big factor is that it is very hard to do anything in Haskell without making a function, there being no assignment or side effects!

Compared to my Perl version, which took only an hour or two, Haskell development time was much longer, the best part of a Saturday. This was partly due to my having a lot less experience with Haskell, but also because the documentation of Regexp::Grammars was much fuller and, for me anyhow, easier to follow. However, either system would allow me to turn round a DSL quickly and without my brain exploding. Both the Perl and Haskell communities have excellent and friendly suport communities on Freenode’s IRC channels and there is normally some lovely person on there to answer my daft questions!

Building a little XML parser with Regexp::Grammars has given me a new appreciation of the power of regular expressions and, even if I don’t need to use all the power from the module itself, I shall certainly be using the new regular expression features like named captures in future Perl projects. Working with Haskell and Parsec gave me an insight into approaching a program from a functional perspective and working through it in a thoroughly recursive manner. It has also started to help me feel more comfortable with using monads in my Haskell code, which has to be good.

Thanks for reading

You can download the code for my Perl XML parser, and read Part one: Haskell and Parsec if you like.