Ages ago I made a little form that made it easier to get your twitter feed as an RSS. It just called the Twitter API and it was slightly useful for a few people, which was great. However, Twitter killed their old API and broke various things I’d made that pulled RSS feeds of user streams.

So, I did like anyone would and wrote a little perl script to screenscrape Twitter and generate an RSS feed on the fly from that. Fine. And then I pointed my form at it and thought no more about it. Until one day the server where the script lived died…

One day the server where the script lived died…

I’d hosted the script on OX4, which runs on a bytemark vm with a gigabyte of RAM. The server had a couple of problems running out of memory and CPU when it started getting a lot of concurrent requests. OX4 doesn’t log trafic to any of its sites so I didn’t have much to go on debugging-wise. But I could watch top as requests to the cgi poured in.

Here is the story of how I made my little cgi script scale to a million requests a month.

Start logging

My first response was to try and get some more data on what was happening. I had perl print out the username being requested and the time of the request to a file.open my $out, '>>', 'log.txt' or die 'No twitter rss uses file';

print $out (localtime time) . "$user\n";

close $out;

I could then sort the file in bash and see who was doing what. This command uses perl to strip out the time and date from the log, then sorts the output, so that identical usernames will be next to each other. This is then piped to uniq -c which merges identical adjacent lines and prefixes them with a count. Finally, I pipe this to sort -n which sorts numerically, so the heavier users appear at the end of the output.

$ perl -pe 's/.* //' log.txt | sort | uniq -c | sort -n

…

131 sportsmoneyblog

132 crossvilleinc1

132 theanimalspost

132 wvmetronews

140 nytimes

152 breakingnews

154 assyrianapp

173 sfmixology

173 wtaptelevision

199 ciderpunx

Varnish caching

For a start I focussed on some of the heavier users, like that idiot @ciderpunx. I was getting a lot of repeat requests for some accounts, so I started 500ing those users after asking them to keep a local cache. This helped. For a bit.

But it wasn’t really a solution. I needed to do some caching. Fortunately, OX4’s infrastructure is behind a varnish cache. I just had to set a cache-control header. A single line change was enough to have varnish cache for half an hour. say "Cache-control: max-age:3600";I also did some twidding with the request parameters to strip out irrelevant stuff and make the pages more cacheable. sub vcl_recv {

if (req.url ~ "/cgi-bin/twitter_user_to_rss.pl") {

set req.url = regsub(req.url, "&fetch=.?Fetch.RSS.?", "");

}

…

Moving to FastCGI

Now everything was getting cached for half an hour. The memory was still a problem, but the CPU spikes not so much. About this time I got a domain name for the project and TwitRSS.me was born. I next wanted to focus on the memory consumption. The script was a CGI script. Now when a CGI gets called, Apache launches a process and this uses some server resources. This happens each time the CGI is requested, and creates a fair bit of load. So I ported my script to fastcgi using CGI::Fast, which made it super easy to port the code. It is a subclass of CGI, so all your usual calls in perl work as expected. You just wrap the script in a while loop like thiswhile (my $q = CGI::Fast->new) {

…

}

Of course, Apache now had to be configured. I installed mod_fastcgi and added some code to the vhost config. <Directory /var/www/twitrss.me/>

Options +ExecCGI -MultiViews

AddHandler fastcgi-script .fcgi

DirectoryIndex dispatch.fcgi index.html

<Directory>

ScriptAlias /twitter_user_to_rss/ /var/www/twitrss.me/cgi-bin/twitter_user_to_rss.pl

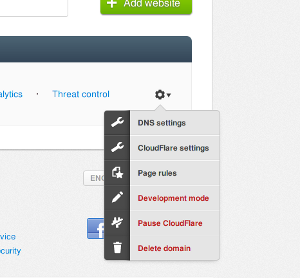

Using Cloudflare

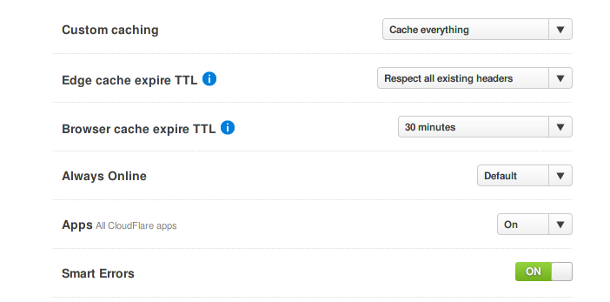

Using fastcgi helped a lot with server load. But I was still using a lot of memory to cache all the pages. Cloudflare is a reverse proxy that you can put in front of your website to speed it up. They offer free and premium plans. I set up a free account and spent a while working out how to have it respect the cache-control headers I was sending and not hit my script with every request. The secret is to use the page rule feature. I set a global rule for the whole domain using the regex syntax and set cache everything in Edge cache expire TTL.

Now cloudflare is setup, the big cache is their problem rather than mine. And the bandwidth is theirs and not mine. Which is how I like it.

My last change was to have heavier users get a longer cache timeout, which seemed fair. I put them into a file and have my script read that and give a 24-hour max age rather than the default half-hour for those users. Because cloudflare is configured to respect that header, it caches everything for 24 hours for those users. Which further reduces my server load.

Conclusion

Now that I have done some work on performance TwitRSS.me is working pretty well. I have moved the hosting and built a frontend for the script so that it looks a bit professional. I have also set up a paypal so I can get donations towards the hosting costs and towards buying a pro cloudflare account, which seems like the ethical thing to do given that they are handling a lot of the load. It will be interesting to see how things develop in the future.